An Overview and Brief Explanation of Direct Preference Optimization (DPO)

18/04/2024 10:42:53

Direct Preference Optimization (DPO) is a streamlined approach to fine-tuning large language models (LLMs) such as Mixtral 8x7B, Llama 2, and even GPT-4. It’s useful because it reduces the complexity and resources needed compared to traditional methods. It makes training more direct and efficient by using preference data to guide the model’s learning, without the need to train a separate reward model.

Imagine you’re teaching someone how to cook a complex dish. The traditional method, Reinforcement Learning from Human Feedback (RLHF), is like giving them a detailed recipe book, asking them to try different recipes, and then refining their cooking based on feedback from a panel of food critics. It’s thorough but time-consuming and requires a lot of trial and error.

Direct Preference Optimization (DPO), on the other hand, is like having a skilled chef beside them who already knows what the final dish should taste like. Instead of trying multiple recipes and getting feedback, the learner adjusts their cooking directly based on the chef’s preferences, which streamlines the learning process. This way, they learn to cook the dish more efficiently, focusing only on what’s necessary to achieve the desired result.

In summary, DPO simplifies and accelerates the process of fine-tuning language models, much like how learning to cook directly from an expert chef can be more efficient than trying and refining multiple recipes on your own.

What is DPO and How Does It Work?

DPO was introduced in 2023 and quickly gained attention in the AI and machine learning community, particularly after it was presented at NeurIPS 2023, where it was recognized for its contribution to fine-tuning large language models.

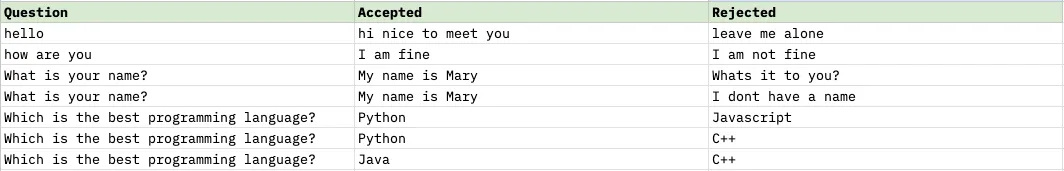

DPO enables precise control over the behavior of LLMs during fine-tuning. The pipeline has two main stages: Supervised Fine-tuning (SFT) and Preference Learning. In the SFT phase, a generic pre-trained language model is fine-tuned with supervised learning on a high-quality dataset specific to the desired tasks, such as dialogue or summarization. The Preference Learning phase is where DPO differentiates itself. Instead of fitting a reward model and sampling extensively from the policy, it optimizes the language model directly on preference data: pairs of responses to the same prompt in which human annotators have marked one as preferred. The objective is a binary cross-entropy loss that raises the likelihood of the preferred response relative to the rejected one, measured against a frozen reference model (typically the SFT model). The core advantage of DPO is that it skips the explicit reward modeling step and also avoids the complexities of reinforcement learning optimization.
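To make the preference-learning objective concrete, here is a minimal sketch of the per-pair DPO loss in plain Python. It assumes the summed log-probabilities of each response under the policy and under the frozen reference model have already been computed; the function and argument names, and the default `beta=0.1`, are illustrative choices rather than part of any particular library.

```python
import math


def log_sigmoid(z: float) -> float:
    """Numerically stable log(sigmoid(z))."""
    if z >= 0:
        return -math.log1p(math.exp(-z))
    return z - math.log1p(math.exp(z))


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # assumed value; controls how far the policy may drift
) -> float:
    """Binary cross-entropy style DPO loss for a single preference pair.

    loss = -log sigmoid(beta * ((log pi(y_w|x) - log pi_ref(y_w|x))
                              - (log pi(y_l|x) - log pi_ref(y_l|x))))
    where y_w is the preferred response and y_l the rejected one.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    return -log_sigmoid(margin)


# Hypothetical log-probabilities, for illustration only.
same_as_reference = dpo_loss(-10.0, -12.0, -10.0, -12.0)
favors_chosen = dpo_loss(-8.0, -14.0, -10.0, -12.0)
favors_rejected = dpo_loss(-12.0, -10.0, -10.0, -12.0)
print(same_as_reference, favors_chosen, favors_rejected)
```

When the policy is identical to the reference model the margin is zero and the loss equals log 2. The loss falls as the policy shifts probability toward the preferred response and rises when it shifts toward the rejected one, which is the signal that replaces the separate reward model.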

Benefits of DPO

- Simplicity and Efficiency: DPO is more straightforward to implement and train than RLHF. It eliminates the need for a separate reward model and for the extensive hyperparameter tuning that reinforcement learning-based methods typically require.

- Performance and Stability: Research indicates that DPO can be stable and performant, matching or exceeding RLHF methods in reported experiments, which covered models with up to 6B parameters. This makes it a viable option for aligning LLMs with specific preferences.

- Resource Optimization: DPO is computationally lightweight, which can be a significant advantage for resource-constrained projects or environments.

Downsides of DPO

- Dependence on Quality Preference Data: The success of DPO relies heavily on the quality of the preference data used for training. If the preference data is not well curated or representative, the model may not perform as expected.

- Limited Research and Applications: Because DPO is a relatively new approach, some aspects of it may not yet be as well understood or explored as more established methods like RLHF.

- Potential for Bias: Like any data-driven approach, DPO risks inheriting biases present in the preference data.
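The data-quality concern can be made more concrete with a small sketch. It assumes preference data is stored as prompt / chosen / rejected triples, a common but not universal layout; `PreferencePair` and `filter_pairs` are hypothetical names used only for illustration. The checks below catch mechanical problems only and do not address representativeness or bias.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferencePair:
    """One preference record (assumed layout, for illustration)."""
    prompt: str
    chosen: str    # response the annotator preferred
    rejected: str  # response the annotator did not prefer


def filter_pairs(pairs: list[PreferencePair]) -> list[PreferencePair]:
    """Drop records that carry no usable preference signal."""
    seen: set[PreferencePair] = set()
    kept: list[PreferencePair] = []
    for pair in pairs:
        # Skip records with an empty prompt or response.
        if not (pair.prompt.strip() and pair.chosen.strip() and pair.rejected.strip()):
            continue
        # Identical responses express no preference.
        if pair.chosen.strip() == pair.rejected.strip():
            continue
        # Skip exact duplicates so no pair is over-weighted.
        if pair in seen:
            continue
        seen.add(pair)
        kept.append(pair)
    return kept


raw = [
    PreferencePair("Summarize the report.", "A concise summary.", "An off-topic reply."),
    PreferencePair("Summarize the report.", "A concise summary.", "An off-topic reply."),
    PreferencePair("Say hello.", "Hello!", "Hello!"),
    PreferencePair("", "Some answer.", "Another answer."),
]
clean = filter_pairs(raw)
print(len(clean))
```

Only the first record survives here: the second is a duplicate, the third has identical responses, and the fourth has no prompt.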

Examples of DPO Application

DPO has been successfully applied to fine-tune models like Zephyr 7B and Mixtral 8x7B. Zephyr 7B, for instance, underwent a three-step fine-tuning process built around distilled DPO: large-scale dataset construction, AI feedback collection, and distilled direct preference optimization. This process resulted in the model performing well on chat-related benchmarks. Similarly, Mistral AI’s Mixtral 8x7B Instruct model was fine-tuned using DPO for improved instruction following, achieving notable performance on benchmarks like MT-Bench.

When selecting a model for chat-based use cases, we consider DPO fine-tuned models first because of their strong performance on chat benchmarks.