Top LLM Picks for Coding: Community Recommendations

10/04/2024 21:34:59

LLMs are valuable coding aids. They help generate and discuss code, make it easier for beginners to move their projects forward, and lower the barrier to starting new tasks. For experienced developers, they serve as an advanced tool for optimizing code and suggesting new approaches to complex problems. Although language models can't replace human insight, they significantly streamline programming work.

In this post, we've compiled a list of LLMs recommended for coding, based on user feedback. The LLM landscape evolves quickly, so this information reflects the situation at the time of writing. For the latest updates, follow us on LinkedIn, Reddit, or X.

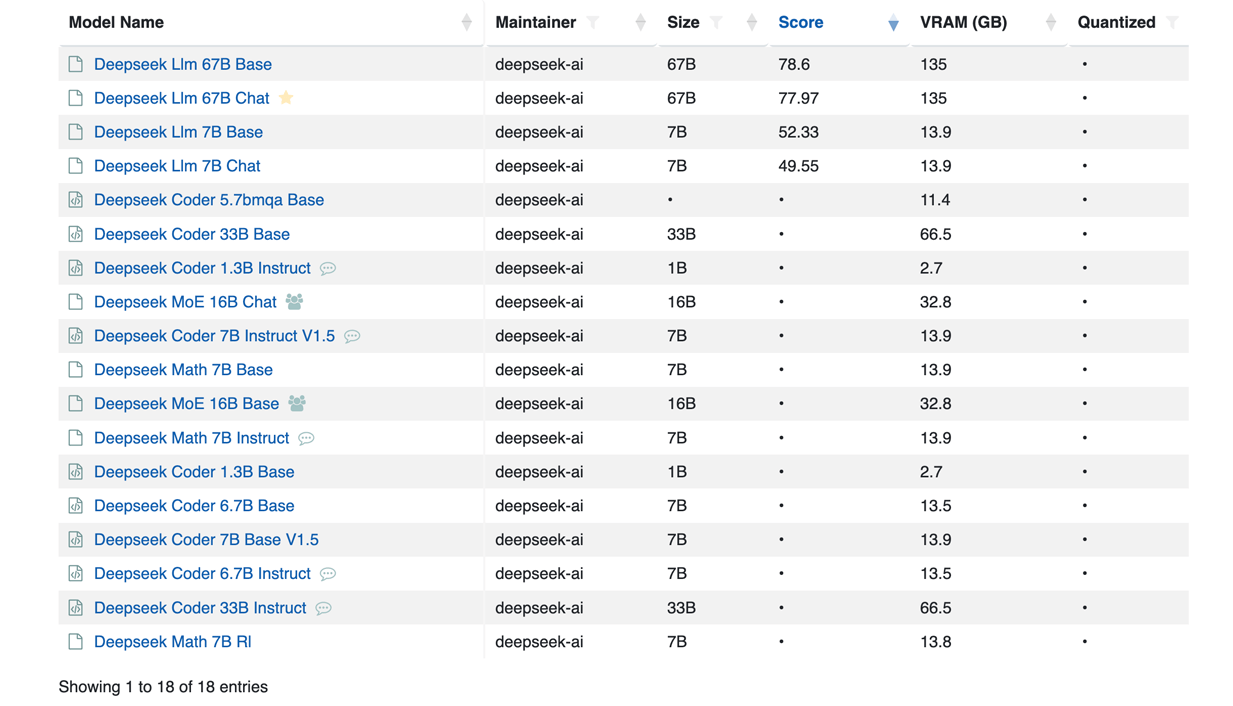

DeepSeek LLM 67B Chat

Feedback on DeepSeek models is largely positive, with users praising their code completion capabilities and efficient performance. DeepSeek's fine-tuned versions are noted for strong benchmark results and for their effectiveness in specific applications. However, some users find them slower than smaller models such as Mistral 7B.

Phind-CodeLlama-34B-v2

Phind-CodeLlama-34B-v2 is a code generation model derived from CodeLlama 34B and further fine-tuned for instruction-based use cases. It was trained on an additional 1.5 billion tokens of high-quality programming data, which makes it proficient in multiple languages, including Python, C/C++, TypeScript, and Java. Users particularly praise its zero-shot performance on coding tasks, which makes it a strong choice for developers looking to speed up their workflows.

Magicoder DS 6.7B

Magicoder DS 6.7B, developed by Yuxiang Wei et al., is tailored for coding tasks and was trained with OSS-Instruct on data derived from open-source code. Because it is fine-tuned from DeepSeek-Coder-6.7b-base and focused on coding, it may underperform in other areas. It works well for specific workflows, but users should keep its potential limitations and biases in mind.

GPT-4

Community feedback on GPT-4 is mixed, but it remains a frequently mentioned option for coding tasks.

DolphinCoder StarCoder2 15B

DolphinCoder StarCoder2 15B is a coding-focused model derived from StarCoder2-15b. It stands out for software engineering and programming across various languages. Because it is uncensored, it is highly compliant with requests, so it should be used with care, especially where ethical considerations apply. The model uses the ChatML prompt format and is recognized for its effectiveness in coding challenges; its development was supported by latitude.sh, BigCode, and the open-source AI community.
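For readers new to ChatML: it wraps each conversation turn in `<|im_start|>` and `<|im_end|>` markers, with the role name on the first line. Here's a minimal Python sketch that builds such a prompt by hand. The helper name `to_chatml` and the example messages are our own illustrations; in practice, prefer the chat template that ships with the model's tokenizer, since details such as the default system prompt can vary between models.

```python
def to_chatml(messages):
    """Render a list of {"role": ..., "content": ...} dicts as a ChatML prompt string.

    Illustrative helper only; check the model card for the exact template it expects.
    """
    parts = []
    for msg in messages:
        # Each turn: <|im_start|>role, newline, content, then <|im_end|>.
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    # Leave an open assistant turn so the model writes its reply next.
    parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([
    {"role": "system", "content": "You are a helpful coding assistant."},
    {"role": "user", "content": "Write a Python function that reverses a string."},
])
print(prompt)
```

The final `<|im_start|>assistant` line is deliberately left open: generation continues from there until the model emits `<|im_end|>`.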

Dolphin 2.5 Mixtral 8x7b

Dolphin 2.5 Mixtral 8x7b is a coding-oriented model built on the Mixtral-8x7b foundation. It is fine-tuned with coding-specific datasets such as Dolphin-Coder and MagiCoder and is noted for generating and debugging code effectively. Because it is uncensored, users are advised to add their own alignment layer to ensure ethical use. It is recognized for helping with code completion and suggesting creative coding solutions, although community discussions did not go into its hardware requirements in detail.

Refact-1.6B

Refact-1.6B is focused on code completion. It supports multiple programming languages and can also handle chat-style coding conversations. It is built for generating and completing code across a range of tasks; it works mainly in English but can understand other natural languages in code comments.

Mixtral 8x7B Instruct V0.1

The Mixtral 8x7B Instruct V0.1 model is highly recommended for coding help, where it acts more like a helpful guide: it is better at giving advice than at writing new code on its own. Some users say that standard benchmarks don't capture how well the model comes up with new solutions.

Mistral 7B Instruct V0.2

Mistral 7B Instruct V0.2 is recognized for its ability to explain code clearly, making it a valuable tool for developers trying to understand complex code structures. While it shines at breaking down code step by step, it may struggle with more complex code-generation prompts. Even so, it can generate basic code and helpful one-liners, often faster than models like DeepSeek. This makes Mistral 7B particularly useful for education, code reviews, and improving programming comprehension.

Hermes-2-Pro-Mistral-10.7B

Hermes-2-Pro-Mistral-10.7B, which builds on a 7B model, works well for coding, explaining functions, and handling JSON data. It is versatile across tasks such as coding and translation, and it is quite accurate, with reported scores of 91% on function calling and 84% on JSON output. It uses the ChatML prompt format, which makes it easy to adapt to different use cases. People recommend it for its flexibility and strong performance for its size.
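When you ask any model for structured output, it helps to validate the reply before using it. The sketch below parses a reply and checks for expected keys using only Python's standard library. The function name `parse_json_reply`, the example reply, and the key names are hypothetical; this is not the evaluation behind the scores quoted above, and it does not reproduce Hermes' own function-calling format.

```python
import json
from typing import Any, Iterable


def parse_json_reply(reply: str, required_keys: Iterable[str]) -> dict[str, Any]:
    """Parse a model reply as a JSON object and check that expected keys are present.

    Raises ValueError if the reply is not valid JSON, not an object, or missing keys.
    """
    try:
        data = json.loads(reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply is JSON but not an object")
    missing = [key for key in required_keys if key not in data]
    if missing:
        raise ValueError(f"missing keys: {missing}")
    return data


# Hypothetical model reply describing a function call.
reply = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
call = parse_json_reply(reply, ["name", "arguments"])
print(call["name"], call["arguments"]["city"])
```

Raising on failure, rather than returning `None`, makes it easy to retry the request or fall back to a different prompt.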

Phi-2

Phi-2, a 2.7-billion-parameter model, excels at QA, chat, and coding tasks, especially in Python. It is known for strong performance in language understanding and logical reasoning, and it is well suited to research on AI safety. Because it runs on setups with limited VRAM, it is a resource-efficient choice. Its outputs are useful but should be treated as suggestions, with potential biases in mind.
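As a rough rule of thumb, the memory needed just to hold a model's weights is the parameter count times the bytes per parameter. Here's a back-of-the-envelope Python sketch applying it to Phi-2's 2.7 billion parameters at several precisions. It ignores activations, the KV cache, and framework overhead, so treat the numbers as lower bounds; the helper `weight_memory_gib` is our own illustration.

```python
def weight_memory_gib(n_params: float, bytes_per_param: float) -> float:
    """Approximate memory for model weights alone, in GiB (2**30 bytes).

    Excludes activations, the KV cache, and runtime overhead, so real usage is higher.
    """
    return n_params * bytes_per_param / 2**30


PHI2_PARAMS = 2.7e9  # parameter count quoted above

# Bytes per parameter for common precisions (4-bit = half a byte).
for label, nbytes in [("fp32", 4), ("fp16/bf16", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    print(f"{label:>9}: ~{weight_memory_gib(PHI2_PARAMS, nbytes):.1f} GiB")
```

At fp16 the weights alone come to about 5 GiB, and 4-bit quantization brings that down to roughly 1.3 GiB, which helps explain why Phi-2 fits on modest GPUs.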

OpenCodeInterpreter DS 6.7B

OpenCodeInterpreter DS 6.7B improves code generation by integrating execution feedback, with significant gains on the HumanEval and MBPP benchmarks. It is based on deepseek-coder-6.7b-base, and each model iteration shows further improvements, particularly when execution feedback and synthetic human feedback are added. The OpenCodeInterpreter series, which ranges from 1.3B to 70B parameters, offers improved code execution and interpretation capabilities, as detailed benchmark comparisons show. It suits both researchers and developers and handles coding tasks of varying scale and complexity.
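To illustrate the general idea of execution feedback, here's a minimal, generic sketch of the loop: the model's code is run against a test, and any error message is appended to the next prompt. It is not OpenCodeInterpreter's actual training or inference pipeline; `generate`, `fake_generate`, and the prompt wording are hypothetical stand-ins. Note that `exec` runs arbitrary code, so model output should only be executed inside a sandbox.

```python
import traceback
from typing import Callable, Optional


def run_candidate(code: str, test: str) -> Optional[str]:
    """Execute candidate code plus a test snippet; return an error message, or None on success."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # define the candidate function(s); sandbox this in real use
        exec(test, namespace)  # run assertions against them
    except Exception:
        return traceback.format_exc(limit=1)
    return None


def refine_with_feedback(generate: Callable[[str], str], task: str, test: str,
                         max_rounds: int = 3) -> tuple[Optional[str], int]:
    """Ask the model for code, run it, and feed any error back into the next prompt."""
    prompt = task
    for round_no in range(1, max_rounds + 1):
        code = generate(prompt)
        error = run_candidate(code, test)
        if error is None:
            return code, round_no
        prompt = f"{task}\n\nYour previous code:\n{code}\nfailed with:\n{error}\nPlease fix it."
    return None, max_rounds


# Hypothetical stand-in for an LLM call: wrong on the first try, fixed once it sees feedback.
def fake_generate(prompt: str) -> str:
    if "failed with" in prompt:
        return "def add(a, b):\n    return a + b\n"
    return "def add(a, b):\n    return a - b\n"


code, rounds = refine_with_feedback(fake_generate, "Write add(a, b).", "assert add(2, 3) == 5")
print(rounds)  # 2
```

Capping the number of rounds keeps a model that never converges from looping forever.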

We hope this list helps with your current coding projects. Please share your experiences with the models you've tried by leaving feedback on the model's profile on our website. All feedback is welcome!