NuminaMath-7B-TIR: A New Math-Focused Language Model

15/07/2024 11:49:01

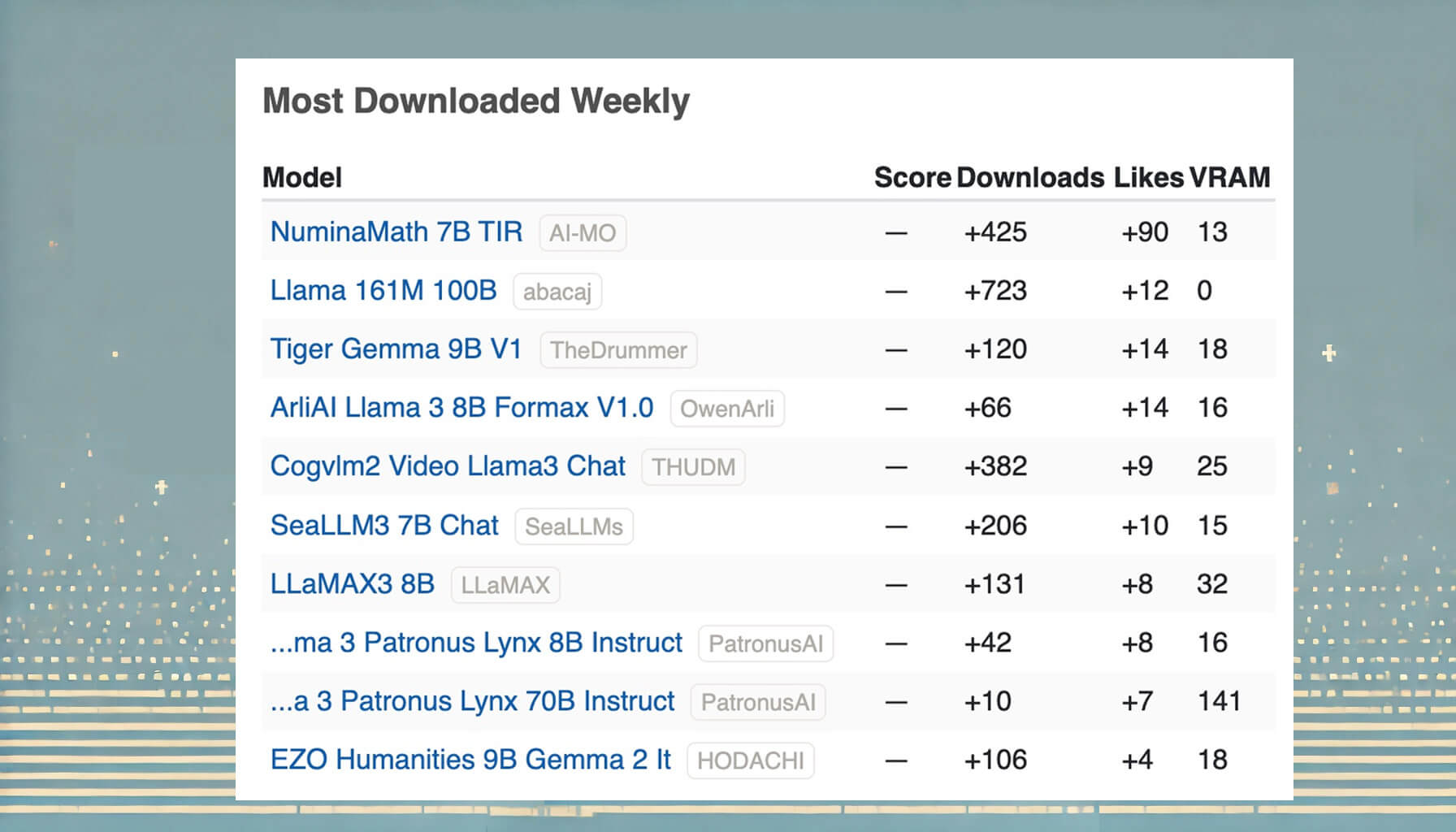

Last week, the Numina team released NuminaMath 7B TIR, a new math-focused language model. It quickly reached the top position in our "Top-Trending LLMs over the Last Week" ranking.

For context, our ranking is based on the number of downloads and likes, using data from Hugging Face and LLM Explorer.

To understand what makes this model stand out, let's examine its key characteristics:

- Built on: deepseek-ai/deepseek-math-7b-base

- Parameters: 6.91 billion

- Key feature: Tool-Integrated Reasoning (TIR) with Python REPL

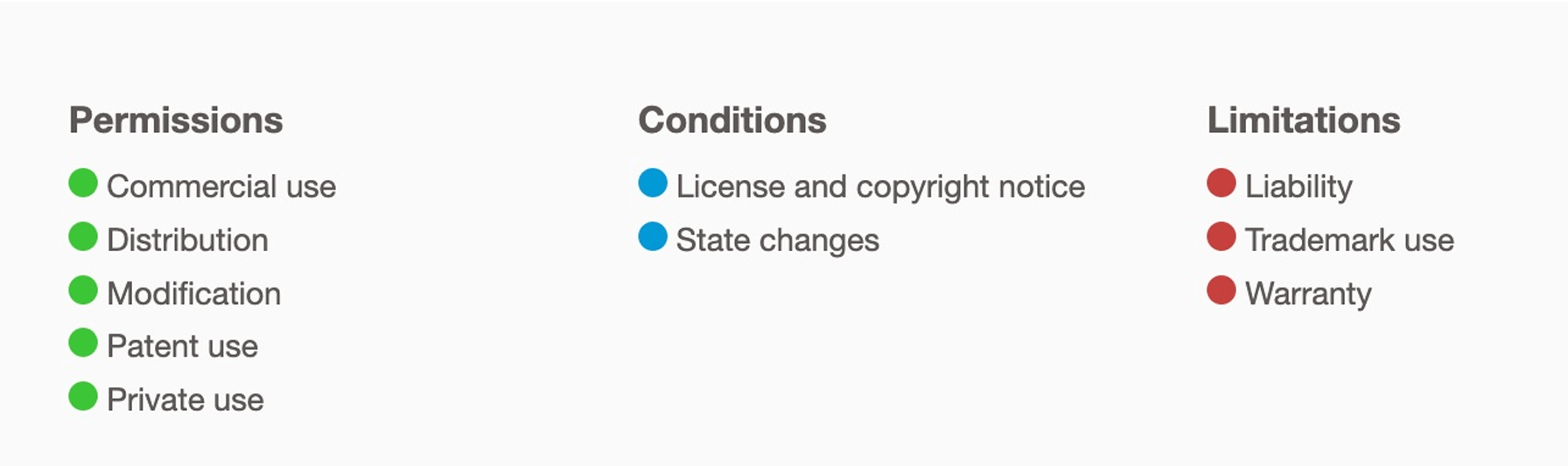

- License: Apache 2.0 (open-source)

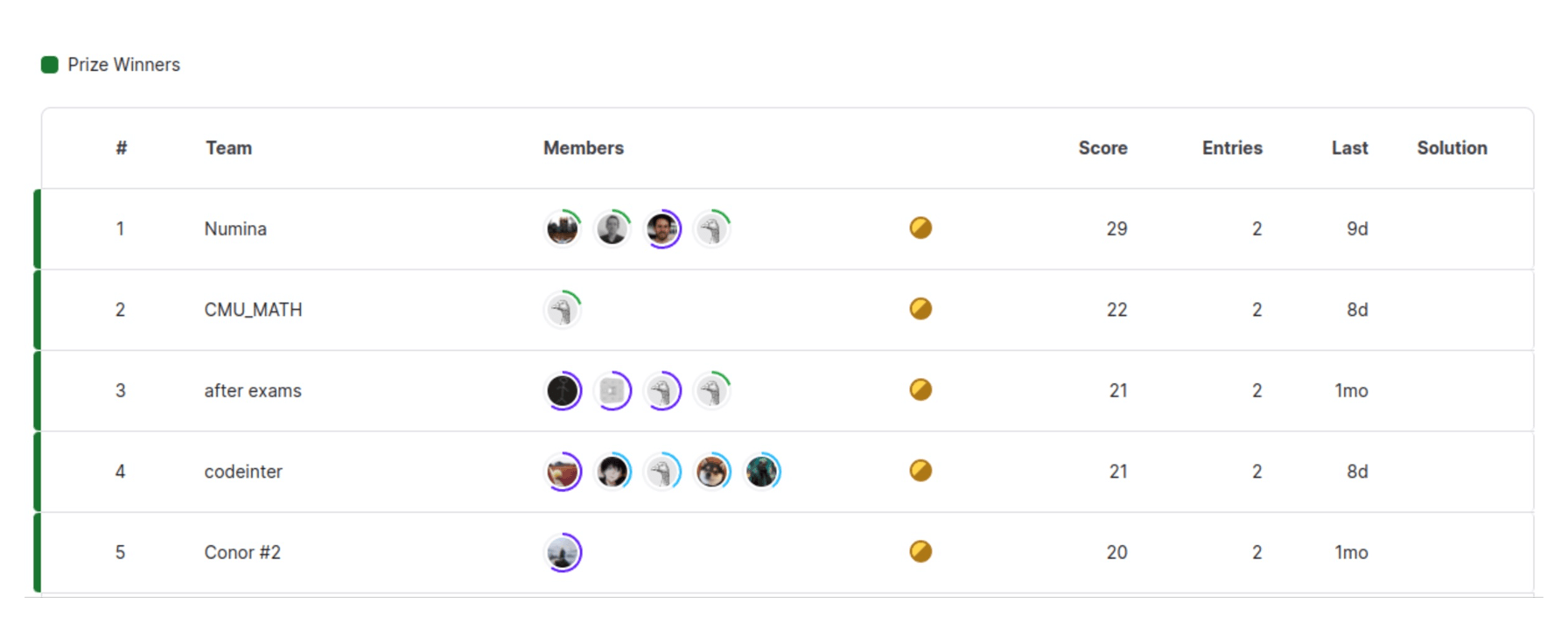
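For a rough sense of what 6.91 billion parameters means on hardware, the weights-only memory footprint can be estimated from the bytes used per parameter. The sketch below is back-of-the-envelope arithmetic, not a measurement: the precisions listed are assumptions (the article does not say how the weights are stored), and the figures ignore activations, the KV cache, and framework overhead.

```python
# Weights-only memory estimate for a 6.91B-parameter model.
# The parameter count comes from the article; the storage precisions
# below are illustrative assumptions.
NUM_PARAMETERS = 6.91e9

BYTES_PER_PARAMETER = {
    "float32": 4.0,
    "bfloat16": 2.0,
    "int8": 1.0,
    "4-bit": 0.5,
}


def weight_memory_gb(num_parameters: float, bytes_per_parameter: float) -> float:
    """Return the weight storage size in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


if __name__ == "__main__":
    for precision, size in BYTES_PER_PARAMETER.items():
        print(f"{precision:>8}: {weight_memory_gb(NUM_PARAMETERS, size):.2f} GB")
```

Under these assumptions, 16-bit weights alone take about 13.8 GB, so actual memory use at inference time will be higher.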

The model's capabilities are evident in its recent achievement: NuminaMath 7B TIR won the first progress prize at the AI Math Olympiad (AIMO) 🔥, scoring 29/50 on the public and private test sets.

Training Process:

- Stage 1: Fine-tuned on a large dataset of math problems with Chain of Thought solutions

- Stage 2: Further fine-tuned on synthetic datasets using tool-integrated reasoning

Problem-Solving Approach:

- Chain of Thought Reasoning

- Python Code Translation

- Python REPL Execution

- Self-Correction (iterates if initial attempt fails)
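The four steps above can be sketched as a small loop. This is a minimal illustration and not the Numina team's implementation: `generate` is a hypothetical stand-in for a call to the model, the fenced-code convention and the `output` block format are assumptions, and `exec` is used here only for brevity (real deployments would run untrusted code in a sandbox).

```python
import contextlib
import io
import re
import traceback
from typing import Callable, Optional, Tuple

FENCE = "`" * 3  # built at runtime so this listing contains no nested fence
CODE_BLOCK = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def run_python(code: str) -> Tuple[bool, str]:
    """Run code in a fresh namespace; return (succeeded, stdout or traceback)."""
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(code, {"__name__": "__tir__"})  # NOT sandboxed: sketch only
    except Exception:
        return False, traceback.format_exc(limit=1)
    return True, buffer.getvalue().strip()


def solve(
    problem: str,
    generate: Callable[[str], str],  # hypothetical model call: transcript -> text
    max_rounds: int = 4,
) -> Optional[str]:
    """Alternate between model reasoning and code execution until code succeeds."""
    transcript = problem
    for _ in range(max_rounds):
        # Steps 1 and 2: the model reasons in text and writes Python code.
        completion = generate(transcript)
        transcript += "\n" + completion
        match = CODE_BLOCK.search(completion)
        if match is None:
            continue  # no code produced; ask the model again
        # Step 3: execute the code and capture what it printed.
        succeeded, output = run_python(match.group(1))
        transcript += "\n" + FENCE + "output\n" + output + "\n" + FENCE
        if succeeded and output:
            return output
        # Step 4: the error or empty output stays in the transcript,
        # so the next round can correct it.
    return None
```

Feeding the traceback back into the transcript is what lets the next generation fix a failed attempt; capping the loop with `max_rounds` keeps a stubborn problem from running forever.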

Training Details:

- Learning rate: 2e-05

- Train batch size: 4 per device (32 total across 8 GPUs)

- Optimizer: Adam

- Number of epochs: 4.0
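The batch-size figures fit together as 4 × 8 = 32. The sketch below writes that arithmetic out; `TrainConfig` and its field names are hypothetical, gradient accumulation is assumed to be 1 (the article does not mention it), and the dataset size in the usage note is a made-up number for illustration.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class TrainConfig:
    """Hyperparameters listed in the article, plus one labeled assumption."""

    learning_rate: float = 2e-5
    per_device_batch_size: int = 4
    num_devices: int = 8
    num_epochs: float = 4.0
    gradient_accumulation_steps: int = 1  # assumption: not stated in the article

    @property
    def total_batch_size(self) -> int:
        """Examples consumed per optimizer step across all devices."""
        return (
            self.per_device_batch_size
            * self.num_devices
            * self.gradient_accumulation_steps
        )

    def optimizer_steps(self, num_examples: int) -> int:
        """Approximate number of optimizer steps for a dataset of the given size."""
        steps_per_epoch = math.ceil(num_examples / self.total_batch_size)
        return math.ceil(steps_per_epoch * self.num_epochs)
```

For example, `TrainConfig().total_batch_size` evaluates to 32, and with a hypothetical 3,200 training examples `TrainConfig().optimizer_steps(3200)` gives 400 steps over the 4 epochs.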

Framework Versions:

- Transformers 4.40.1

- PyTorch 2.3.1

- Datasets 2.18.0

- Tokenizers 0.19.1
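To compare a local environment against these versions, a check along the following lines may help. It is a sketch under two assumptions: the PyPI distribution names (`transformers`, `torch`, `datasets`, `tokenizers`) are the ones installed, and a local build suffix such as `+cu121` on the PyTorch version can be ignored. The helper name `version_mismatches` is made up for this example.

```python
from importlib import metadata
from typing import Callable, Dict, Optional, Tuple

# Versions listed in the article, keyed by assumed PyPI distribution name.
PINNED: Dict[str, str] = {
    "transformers": "4.40.1",
    "torch": "2.3.1",
    "datasets": "2.18.0",
    "tokenizers": "0.19.1",
}


def version_mismatches(
    pinned: Dict[str, str],
    lookup: Callable[[str], str] = metadata.version,
) -> Dict[str, Tuple[str, Optional[str]]]:
    """Map each mismatched package to (wanted, installed); installed is None if missing."""
    mismatches: Dict[str, Tuple[str, Optional[str]]] = {}
    for package, wanted in pinned.items():
        try:
            installed: Optional[str] = lookup(package)
        except metadata.PackageNotFoundError:
            installed = None
        # Drop any local build suffix (for example "+cu121") before comparing.
        if installed is None or installed.split("+")[0] != wanted:
            mismatches[package] = (wanted, installed)
    return mismatches


if __name__ == "__main__":
    for name, (wanted, installed) in version_mismatches(PINNED).items():
        print(f"{name}: wanted {wanted}, found {installed}")
```

An empty result means the environment matches the listed versions; a different version is not necessarily a problem, only a difference worth knowing about when reproducing results.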

The AI community has shown interest in this open-source model's performance in mathematics. Users have noted its potential to combine math and coding skills. It's important to remember that this is a specialized math model, not a general-purpose AI.

For developers: The model performs well on AMC 12-level problems but may struggle with more advanced problems and with geometry. It is not intended for general chat applications.

NuminaMath 7B TIR represents a new development in mathematical language models and may be of interest to AI researchers, mathematicians, and those following advancements in machine learning.

And we can't skip a few lighthearted comments from the Reddit community:

- Can someone explain how significant this is?

- People can finally complete their math homework and maybe do some advanced modeling.

***

- Can this model do RP?

- If you like dirty math problems, maybe..