Open Source LLMs in the Context of Translation

12/06/2024 09:06:34

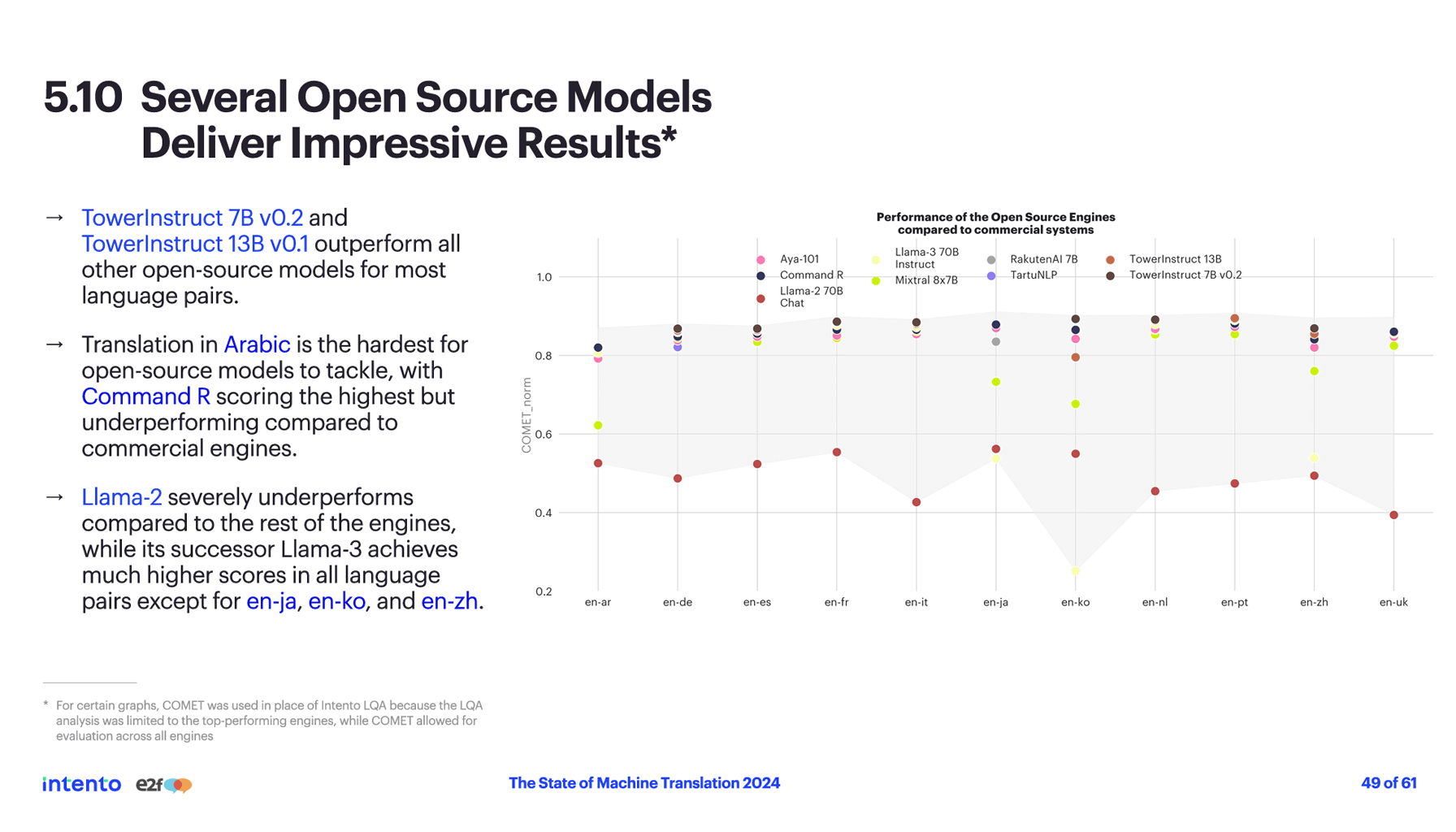

A week ago, Intento, a machine translation and multilingual generative AI platform for global enterprises, published its eighth annual State of Machine Translation report. The report analyzes 52 MT engines and LLMs across 11 language pairs and 9 content domains, and all systems it covers were accessed between March 25 and May 14, 2024. Of particular interest is how open-source LLMs perform on translation.

Open-source LLMs such as TowerInstruct, RakutenAI 7B, Neurotõlge, Aya-101, Command R, and Mixtral 8x7B show promising translation capabilities. Unbabel's TowerInstruct models, built on Llama 2, are designed specifically for translation tasks and perform well in multilingual settings. RakutenAI 7B excels at Japanese and English translation, while Neurotõlge supports both high-resource and low-resource languages, particularly Finno-Ugric ones. Cohere's Aya-101 supports 101 languages with a focus on lower-resourced ones, and Command R excels at reasoning and multilingual generation. Mistral AI's Mixtral 8x7B is noted for high-quality output across a range of languages. However, because their multilingual capabilities are more limited, these models generally fall into the second tier relative to commercial engines.

Despite their potential, open-source LLMs come with a significant trade-off. They are generally 10 to 100 times cheaper than traditional machine translation (MT) systems, but they are also 50 to 1,000 times slower, which limits their suitability for real-time applications. Their performance can be improved through customization: fine-tuning, prompt engineering, and the use of translation memories.
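Of these customization options, prompt engineering with a translation memory is the simplest to illustrate. The following is a minimal Python sketch, not Intento's method or any product's API. It assumes a translation memory held as a plain list of (source, target) pairs, retrieves the closest fuzzy matches with the standard library's `difflib.SequenceMatcher`, and places them in a few-shot prompt. The function names `fuzzy_matches` and `build_prompt`, the 0.6 similarity threshold, the English-to-German pair, and the sample memory entries are all hypothetical; the resulting prompt would then be sent to whichever open-source LLM is in use.

```python
from difflib import SequenceMatcher

# Hypothetical translation memory format: (source segment, approved translation) pairs.
TranslationMemory = list[tuple[str, str]]


def fuzzy_matches(source: str, memory: TranslationMemory,
                  threshold: float = 0.6, k: int = 3) -> list[tuple[str, str]]:
    """Return up to k TM entries whose source side is most similar to `source`.

    Similarity is SequenceMatcher's character-level ratio in [0, 1]; the
    threshold and k are illustrative defaults, not recommended values.
    """
    scored = []
    for tm_src, tm_tgt in memory:
        score = SequenceMatcher(None, source.lower(), tm_src.lower()).ratio()
        if score >= threshold:
            scored.append((score, tm_src, tm_tgt))
    # Highest-similarity matches first
    scored.sort(key=lambda item: item[0], reverse=True)
    return [(tm_src, tm_tgt) for _, tm_src, tm_tgt in scored[:k]]


def build_prompt(source: str, memory: TranslationMemory,
                 src_lang: str = "English", tgt_lang: str = "German") -> str:
    """Assemble a few-shot translation prompt from fuzzy TM matches."""
    parts = [f"Translate the following {src_lang} text into {tgt_lang}.",
             "Follow the terminology and style of these approved translations:"]
    for tm_src, tm_tgt in fuzzy_matches(source, memory):
        parts.append(f"{src_lang}: {tm_src}\n{tgt_lang}: {tm_tgt}")
    # The segment to translate goes last, leaving the target line open for the model
    parts.append(f"{src_lang}: {source}\n{tgt_lang}:")
    return "\n\n".join(parts)


# Hypothetical usage with a two-entry memory
memory = [
    ("Restart the device to apply the update.",
     "Starten Sie das Gerät neu, um das Update anzuwenden."),
    ("The battery is fully charged.", "Der Akku ist vollständig geladen."),
]
print(build_prompt("Restart the router to apply the update.", memory))
```

Here the near-identical "Restart the device…" pair clears the threshold and is included as an example, while the unrelated battery sentence is filtered out. `SequenceMatcher` serves only as a simple stand-in for fuzzy-match scoring, chosen because it ships with the Python standard library.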

Open-source models such as TowerInstruct 7B and Command R come close to the performance of top-tier commercial engines, but they often struggle with complex translations, particularly in languages such as Arabic.

Overall, while open-source LLMs are cost-effective and show impressive results in certain contexts, they still lag behind commercial models in multilingual capabilities and real-time translation performance.