Small Language Models (SLMs): Hidden Power

17/03/2024 13:37:00

In recent years, small language models (SLMs) have attracted considerable interest among AI professionals and enthusiasts alike. They mark a shift towards more accessible and adaptable generative AI, and they have proven useful for both individuals and organizations. Unlike their larger counterparts, these models are designed to run efficiently on devices with limited computational resources, such as laptops, smartphones, and edge devices. This means you don't need a large budget to start deploying language models for your business needs, which has opened up new opportunities for businesses and encouraged more experimentation within the AI community.

Understanding Small Language Models

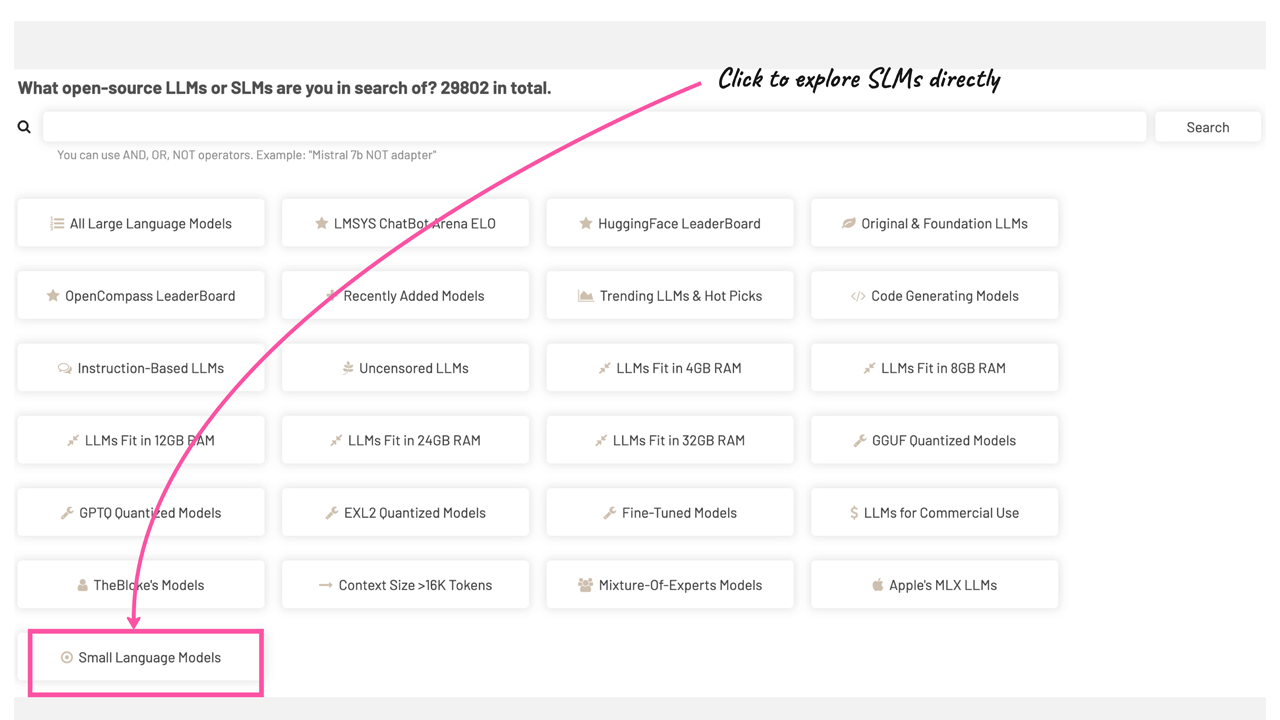

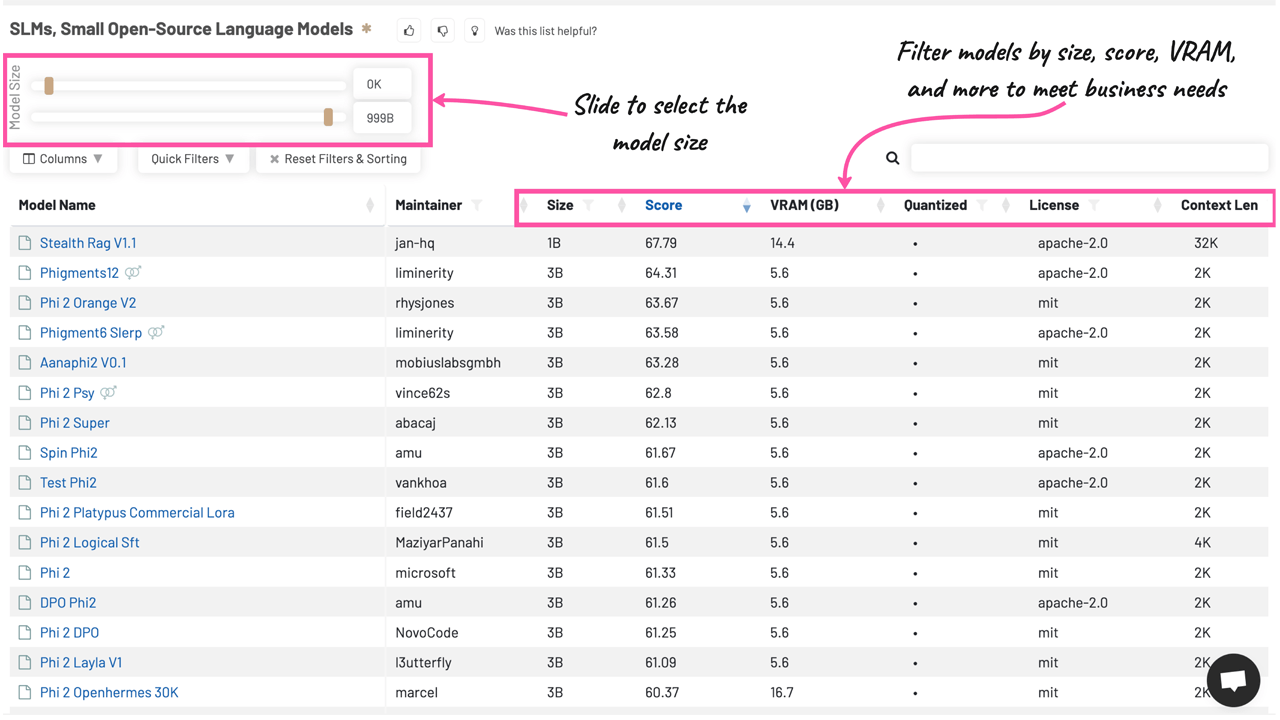

To discover a wider selection of small language models suitable for your business objectives, consider exploring the LLM Explorer. Currently, the LLM Explorer database includes over 30,000 open-source large and small language models. These models are systematically categorized and accompanied by benchmarks, analytics, news, and updates, providing a comprehensive resource for users.

With LLM Explorer, users can conveniently filter small language models based on criteria such as size, performance score, VRAM requirements, and more, ensuring a match with specific business needs.
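To make this kind of filtering concrete, here is a minimal Python sketch. It does not use any LLM Explorer API: the `ModelEntry` fields, the catalog entries, and the scores are all made up for illustration. The VRAM figure is a rough lower bound for the weights only (parameter count times bytes per parameter), so actual requirements will be higher once activations and runtime overhead are included.

```python
from dataclasses import dataclass


@dataclass
class ModelEntry:
    """One row of a hypothetical model catalog (all fields are illustrative)."""
    name: str
    params_billions: float  # parameter count, in billions
    score: float            # some benchmark score, higher is better
    bytes_per_param: float  # e.g. 2.0 for 16-bit weights, 0.5 for 4-bit weights


def weight_memory_gb(model: ModelEntry) -> float:
    """Rough memory needed for the weights alone, in GB.

    Billions of parameters times bytes per parameter gives gigabytes directly.
    Activations, caches, and runtime overhead are ignored.
    """
    return model.params_billions * model.bytes_per_param


def filter_models(catalog, max_params_billions, min_score, max_vram_gb):
    """Keep models that satisfy all three limits, best score first."""
    matches = [
        m for m in catalog
        if m.params_billions <= max_params_billions
        and m.score >= min_score
        and weight_memory_gb(m) <= max_vram_gb
    ]
    return sorted(matches, key=lambda m: m.score, reverse=True)


# Made-up catalog entries, not real models or real benchmark results.
catalog = [
    ModelEntry("tiny-a", 1.1, 42.0, 2.0),
    ModelEntry("small-b", 3.0, 55.5, 2.0),
    ModelEntry("small-c-4bit", 7.0, 61.0, 0.5),
    ModelEntry("large-d", 70.0, 78.0, 2.0),
]

if __name__ == "__main__":
    # Example: at most 8B parameters, score of at least 50, weights within 4 GB.
    for m in filter_models(catalog, 8.0, 50.0, 4.0):
        print(m.name, weight_memory_gb(m))
```

In this sketch, the 4-bit 7B entry fits the 4 GB budget while the 16-bit 3B entry does not, which shows why it helps to filter on memory as well as on parameter count.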

Scope of Application

Despite their smaller size, small language models are highly effective on specific tasks and datasets. They are especially useful in specialized areas such as healthcare, finance, law, and technical translation, where they can provide targeted and relevant responses.

SLMs have a wide range of uses. They are great for creating chatbots, answering questions, summarizing texts, and generating content, particularly when there's a need to work within tight computational limits.

In healthcare, SLMs are improving the way care is delivered by making clinical documentation faster, identifying potential safety issues more efficiently, and enhancing telemedicine with smarter virtual assistants. They help make healthcare processes more efficient and secure, while also supporting the privacy and compliance needs critical to the sector.

Exploring Small Language Models: Pros and Cons

Small language models (SLMs) are known for their swift training and deployment times, and their minimal computational requirements make them cost-effective. They are easy to scale and adapt as requirements change. Their smaller size also tends to make them more transparent, which simplifies understanding and auditing their behavior. Because they are designed for narrow, well-defined tasks, they can produce accurate results within that scope and can help reduce bias. With a streamlined architecture and fewer parameters, SLMs can also pose a lower security risk in AI applications.

However, the compact size that makes SLMs accessible also restricts their capacity for complex language tasks. This limitation can impact their understanding of context and ability to provide detailed responses. While SLMs perform well within their designated scope, they might not achieve the same level of fluency and coherence as their larger counterparts, which learn from vast datasets.

In conclusion, small language models are notable for their practicality, making advanced AI more attainable for various uses. Although they face challenges with complex tasks, their strengths in efficiency, adaptability, and safety highlight their significance in the expanding realm of AI and natural language processing.