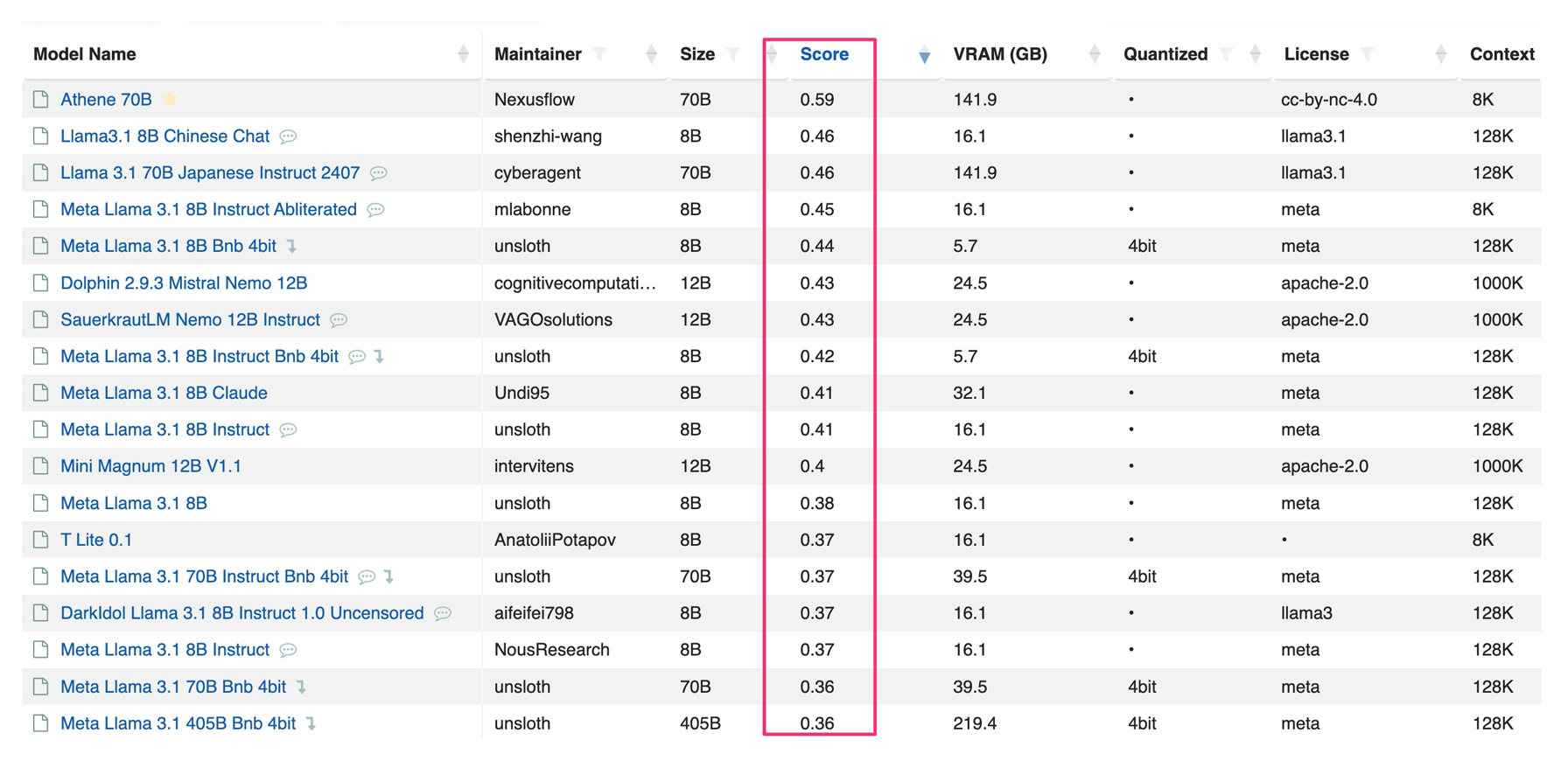

The LLM Explorer Rank: A Comprehensive Evaluation Metric for Language Models

28/07/2024 18:41:29

The LLM Explorer Rank (Score) is a comprehensive metric for dynamic evaluation of language models. It combines factors like popularity, recency, and expert ratings to provide a balanced assessment. The system uses normalized weights, logarithmic scaling, and a recency boost to ensure fair comparisons. It allows for flexible weighting and includes a method to compare quantized models. The rank enables users to evaluate models across various categories and criteria, making it useful for both researchers and developers. This approach aims to offer a more holistic view of language model performance than single-factor metrics.
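The summary above names three mechanisms: normalized weights, logarithmic scaling, and a recency boost. The Python sketch below shows one way they could fit together. It is not the formula LLM Explorer actually uses, which this post does not give. The weight values, the 180-day half-life, the `[0, 1]` expert rating, and every function name are assumptions made for illustration.

```python
import math
from datetime import date

# Hypothetical weights: the post does not publish the real values.
DEFAULT_WEIGHTS = {"popularity": 0.4, "recency": 0.2, "expert": 0.4}


def normalize_weights(weights):
    """Scale the weights so they sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return {name: value / total for name, value in weights.items()}


def log_scaled(value, max_value):
    """Map a raw count to [0, 1] on a log scale so very large counts do not dominate."""
    if max_value <= 0:
        return 0.0
    return math.log1p(max(value, 0)) / math.log1p(max_value)


def recency_boost(released, today, half_life_days=180):
    """Exponential decay: 1.0 on the release day, 0.5 after half_life_days (assumed)."""
    age_days = max((today - released).days, 0)
    return 0.5 ** (age_days / half_life_days)


def explorer_style_score(downloads, max_downloads, released, expert_rating,
                         today, weights=None):
    """Weighted sum of the three factors; the result lies in [0, 1]."""
    w = normalize_weights(weights or DEFAULT_WEIGHTS)
    parts = {
        "popularity": log_scaled(downloads, max_downloads),
        "recency": recency_boost(released, today),
        "expert": min(max(expert_rating, 0.0), 1.0),  # assumed pre-scaled to [0, 1]
    }
    return sum(w.get(name, 0.0) * value for name, value in parts.items())


# Example: a moderately popular model released 90 days before the evaluation date.
score = explorer_style_score(
    downloads=50_000,
    max_downloads=5_000_000,
    released=date(2024, 4, 29),
    expert_rating=0.8,
    today=date(2024, 7, 28),
)
print(f"score = {score:.3f}")
```

With log scaling, a model with 1% of the top download count still earns well over 1% of the popularity credit, which is the effect the post describes as keeping comparisons fair.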

Key Components of the LLM Explorer Rank

- Operational Relevance: The rank combines factors that are particularly relevant to practical implementation concerns:

  - Popularity metrics indicate community support and the likely availability of troubleshooting resources.

  - Recency helps in assessing the model's compatibility with current infrastructure and frameworks.

  - Elo scores and HuggingFace rankings provide insights into performance and quality.

- Resource Optimization:

  - The ranking system includes considerations for quantized models, which is crucial for teams deploying to resource-constrained environments or edge devices.

  - It allows for comparisons of models based on VRAM requirements, helping teams select models that fit their hardware constraints.

- Deployment Flexibility: The rank's weighting structure can be adjusted to prioritize factors most relevant to specific deployment scenarios, such as emphasizing efficiency for edge computing or prioritizing accuracy for critical applications.

- Integration Insights: By providing a unified comparison framework, the rank helps teams assess how different models might integrate with existing systems and workflows.

- Continuous Improvement Alignment: The inclusion of a recency factor aligns with principles of continuous improvement, encouraging teams to consider newer models that might offer performance or efficiency gains.

- Risk Assessment: Popularity metrics and expert evaluations incorporated in the rank can serve as proxies for model stability and reliability, crucial factors in risk assessment for production deployments.

- Scalability Considerations: The rank's methodology, which prevents highly popular models from dominating, helps teams discover potentially more efficient or specialized models that might better suit scalable architectures.

- Benchmark Complementarity: While providing a holistic view, the LLM Explorer Rank also directs users to specific benchmarks, allowing teams to perform deeper, task-specific evaluations when necessary.
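The list above mentions two practical uses: comparing models by their VRAM requirements and adjusting the weights for a particular deployment scenario. The sketch below is a minimal illustration of both. The `Candidate` type, the `PROFILES` weight tables, and the factor names are hypothetical and are not taken from LLM Explorer. The VRAM estimate counts only the weights (parameters times bits per weight) and ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    params_billions: float
    bits_per_weight: int
    scores: dict  # factor name -> value already scaled to [0, 1]


def weight_vram_gb(params_billions, bits_per_weight):
    """Approximate memory for the weights alone, in GB (10^9 bytes)."""
    return params_billions * bits_per_weight / 8


# Hypothetical weight profiles for two deployment scenarios.
PROFILES = {
    "edge": {"efficiency": 0.6, "quality": 0.3, "popularity": 0.1},
    "critical": {"efficiency": 0.1, "quality": 0.7, "popularity": 0.2},
}


def rank_for(candidates, profile, vram_budget_gb):
    """Drop models that exceed the VRAM budget, then sort by the profile's weighted score."""
    weights = PROFILES[profile]
    total = sum(weights.values())

    def score(candidate):
        return sum(w / total * candidate.scores.get(factor, 0.0)
                   for factor, w in weights.items())

    fitting = [c for c in candidates
               if weight_vram_gb(c.params_billions, c.bits_per_weight) <= vram_budget_gb]
    return sorted(fitting, key=score, reverse=True)


# Made-up candidates, for illustration only.
candidates = [
    Candidate("small-q4", 7, 4, {"efficiency": 0.9, "quality": 0.6, "popularity": 0.5}),
    Candidate("large-fp16", 13, 16, {"efficiency": 0.3, "quality": 0.9, "popularity": 0.8}),
    Candidate("large-q4", 13, 4, {"efficiency": 0.6, "quality": 0.85, "popularity": 0.4}),
]

for profile in PROFILES:
    ranked = rank_for(candidates, profile, vram_budget_gb=8)
    print(profile, [c.name for c in ranked])
```

Filtering on the hardware constraint before scoring means an oversized model never ranks, however strong its other factors are. The same candidates can still come out in a different order under each profile.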

For professionals working with large and small language models, the LLM Explorer Rank serves as a valuable tool in the model selection and deployment pipeline.
