UGI Leaderboard on LLM Explorer

08/04/2024 13:14:39

In response to a growing number of queries for 'uncensored models,' we have added a new leaderboard to our LLM Leaderboards Catalog: the UGI Leaderboard, which evaluates uncensored general intelligence (UGI).

The UGI Leaderboard is hosted on Hugging Face Spaces. It assesses how well models process and generate content on sensitive or controversial topics. It uses a set of undisclosed questions so that the benchmark stays effective and models cannot be trained on the test itself. Each model receives two scores: the UGI score, which measures its knowledge of uncensored information, and the W/10 score, which rates its willingness to engage with controversial topics out of 10.

To keep the leaderboard fair and avoid potential problems or criticism, the specific assessment questions are kept confidential. This lets the leaderboard remain a useful tool for comparing models ranging from 1B to 155B parameters without compromising ethical standards.

User Feedback on the UGI Leaderboard

User feedback indicates that the UGI Leaderboard is both useful and accurate. Its rankings match what users expect from the models they have tried, which makes it a reliable reference. Users also report that it noticeably reduces the time they spend searching for uncensored large language models (LLMs). Overall, the feedback suggests the leaderboard is a practical community resource that makes LLMs easier to find and evaluate.

Using LLM Explorer for Uncensored Models

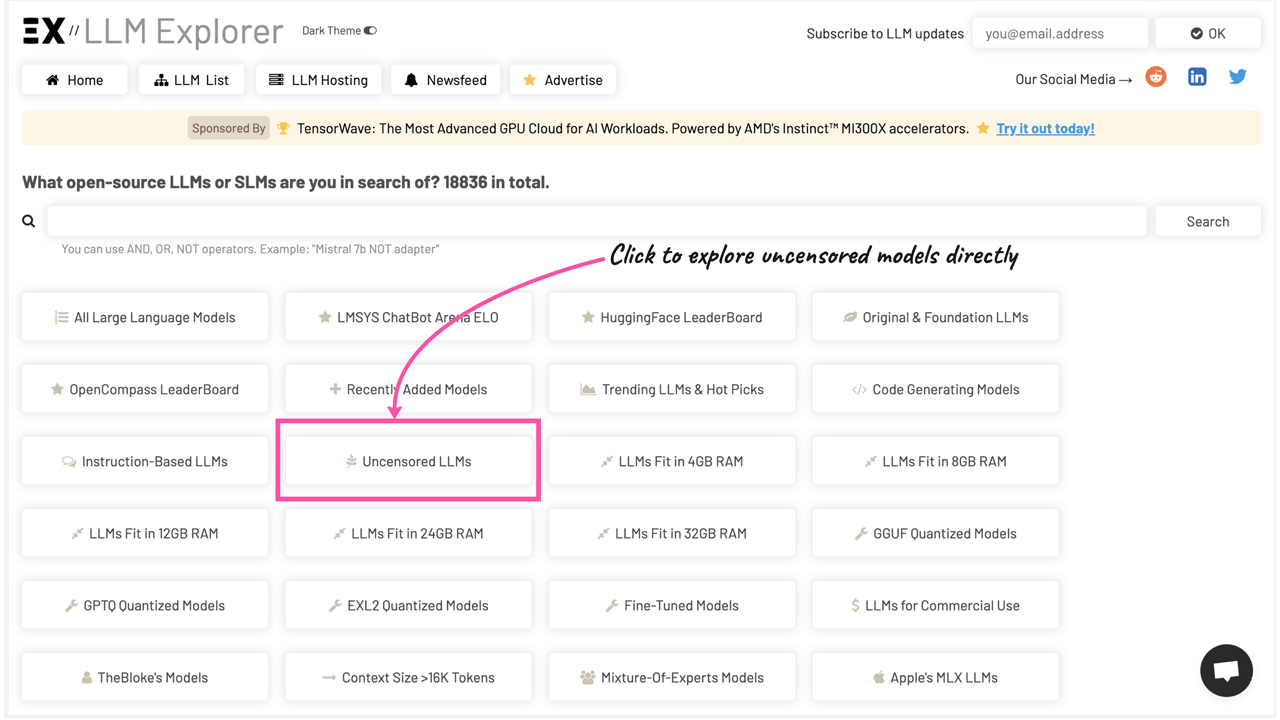

The UGI Leaderboard is a valuable way to explore uncensored LLMs and a significant contribution to the AI community, but it does not cover every uncensored model. LLM Explorer fills that gap with a specialized catalog of uncensored models suited to business needs:

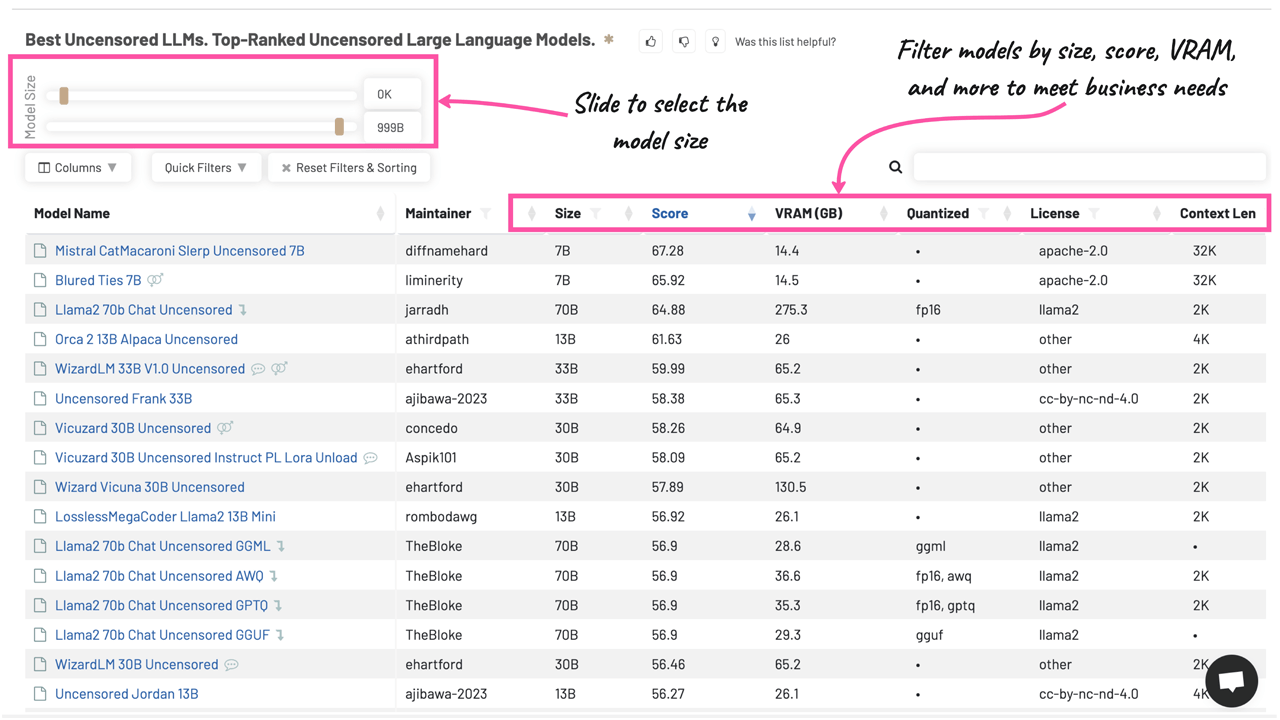

LLM Explorer has a dedicated section for uncensored models, with an intuitive interface that gives quick access to a wide range of options. Advanced filters let users narrow their choices by model size, performance, VRAM requirements, availability of quantized versions, and suitability for commercial use, so businesses can efficiently find a model that meets their specific requirements.
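To make the filtering criteria concrete, here is a minimal local sketch of how such filters combine. It does not use LLM Explorer's API or data: the `ModelRecord` fields, the `filter_models` helper, and the sample entries are all hypothetical, chosen only to mirror the criteria listed above (size, performance, VRAM, quantized versions, commercial use).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ModelRecord:
    # Hypothetical catalog entry; field names are illustrative, not LLM Explorer's schema.
    name: str
    params_b: float       # parameter count in billions
    score: float          # placeholder performance score (higher is better)
    vram_gb: float        # approximate VRAM needed to run the model
    has_quantized: bool   # whether a quantized version is available
    commercial_use: bool  # whether the license permits commercial use


def filter_models(
    models: List[ModelRecord],
    max_params_b: Optional[float] = None,
    min_score: Optional[float] = None,
    max_vram_gb: Optional[float] = None,
    require_quantized: bool = False,
    require_commercial: bool = False,
) -> List[ModelRecord]:
    """Return models that satisfy every given constraint, best score first."""
    selected = []
    for m in models:
        if max_params_b is not None and m.params_b > max_params_b:
            continue
        if min_score is not None and m.score < min_score:
            continue
        if max_vram_gb is not None and m.vram_gb > max_vram_gb:
            continue
        if require_quantized and not m.has_quantized:
            continue
        if require_commercial and not m.commercial_use:
            continue
        selected.append(m)
    # Sort by the placeholder performance score, highest first.
    return sorted(selected, key=lambda m: m.score, reverse=True)


if __name__ == "__main__":
    # Made-up placeholder entries, not real models or real benchmark results.
    catalog = [
        ModelRecord("model-a", 7, 40.0, 16, True, True),
        ModelRecord("model-b", 70, 55.0, 140, True, False),
        ModelRecord("model-c", 13, 48.0, 26, False, True),
        ModelRecord("model-d", 8, 45.0, 18, True, True),
    ]
    hits = filter_models(catalog, max_vram_gb=24, require_quantized=True, require_commercial=True)
    print([m.name for m in hits])  # ['model-d', 'model-a']
```

Each filter is optional and the constraints are combined with AND, which is how most catalog filter panels behave; ranking the survivors by a single score is a simplifying assumption, since a real catalog may offer several sort orders.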