What's the Deal with Solar Pro Preview Instruct?

16/09/2024 12:23:40

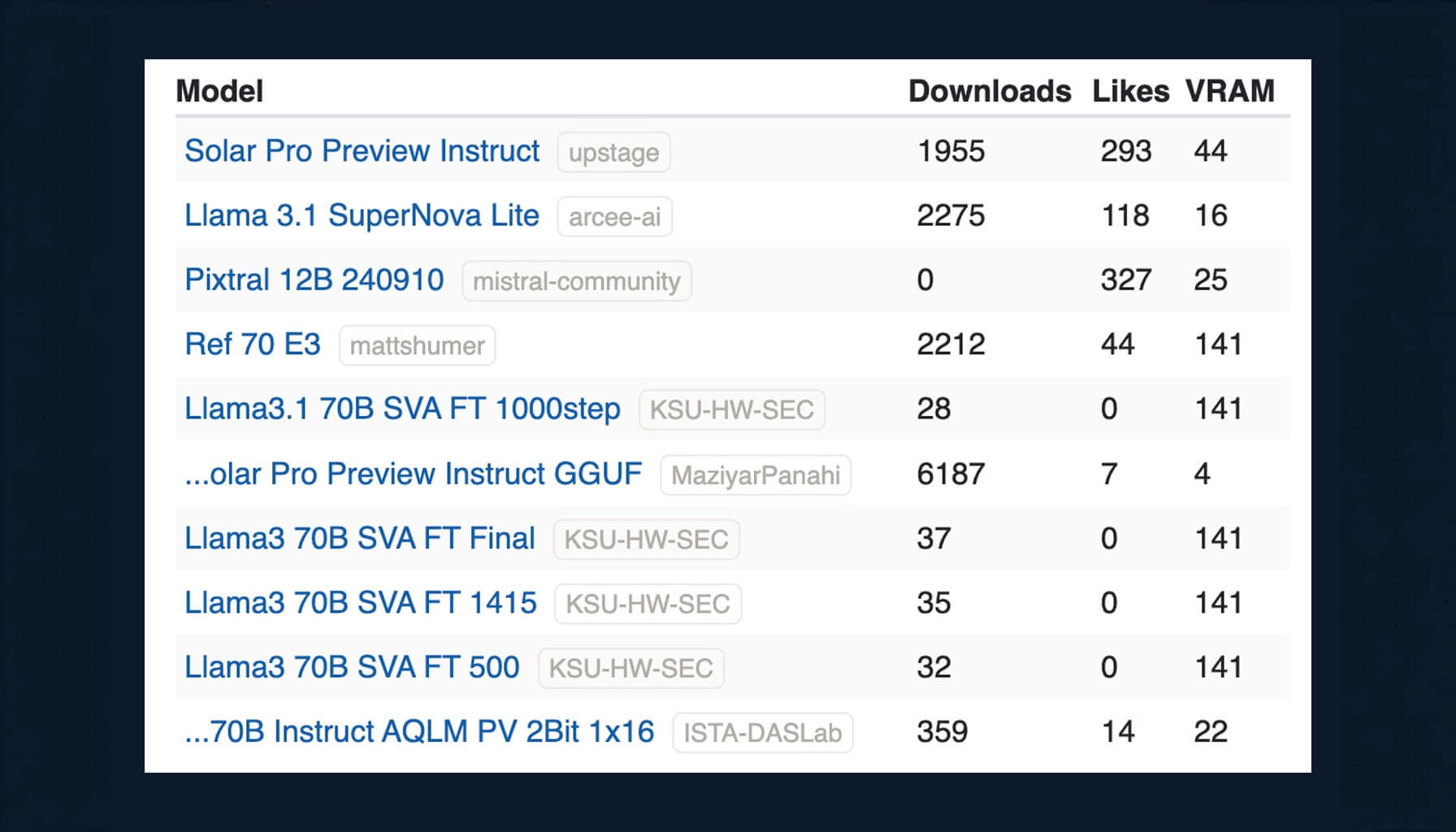

Welcome back to our weekly ranking of the top-trending models. The ranking is based on the most downloaded and most liked language models on Hugging Face, combined with LLM Explorer data.

Topping our charts this week is Solar Pro Preview Instruct.

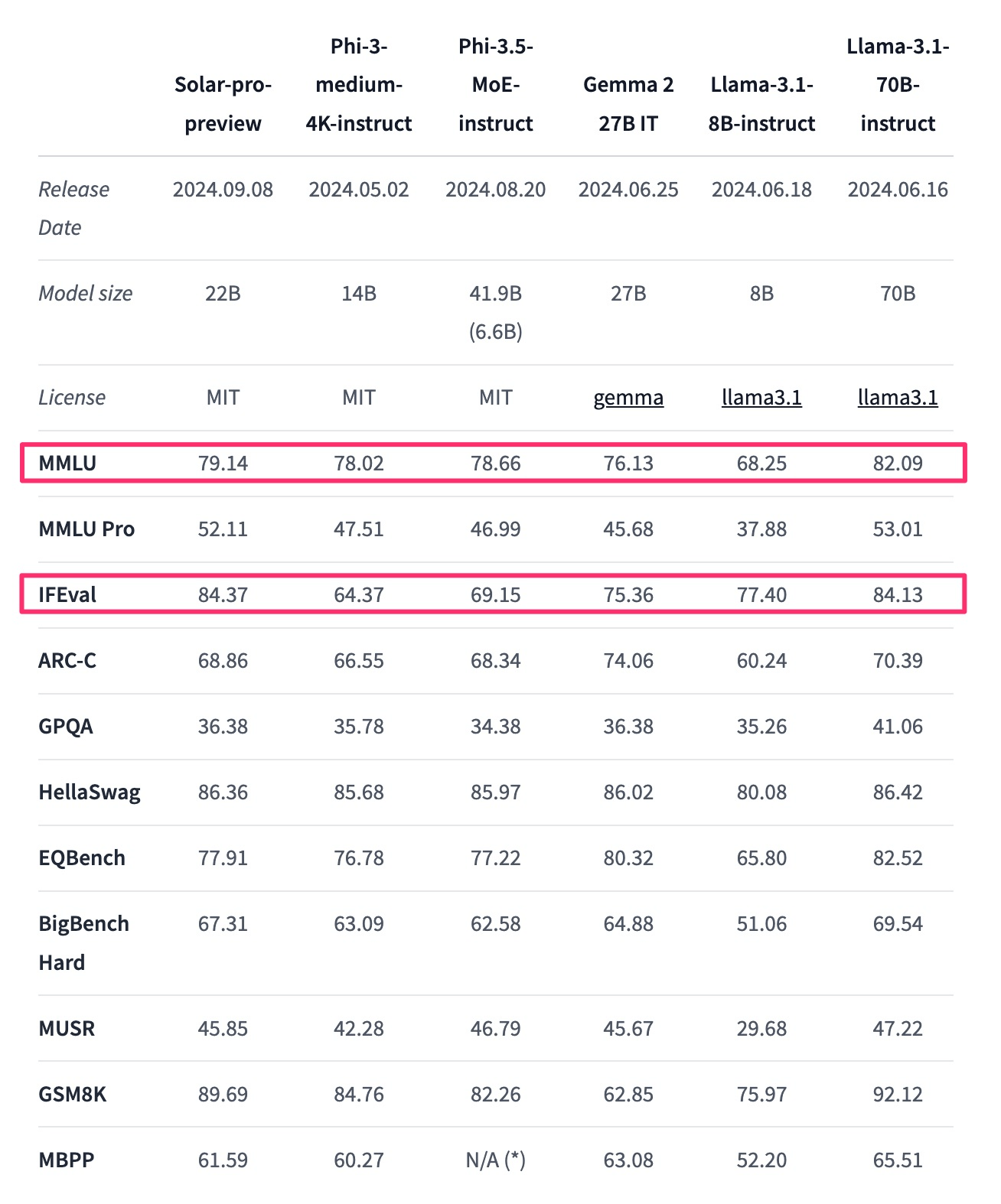

It's a 22-billion-parameter model designed to fit on a single GPU with 80 GB of VRAM. It was built from Phi-3-medium (14B) using an enhanced depth up-scaling method, and the preview version has a maximum context length of 4K tokens. The full release, Solar Pro, is slated for November 2024 with expanded capabilities. The model uses the ChatML prompt template for best instruction-following results. It outperforms models with fewer than 30B parameters and performs comparably to some 70B models. Notably, it shows strong results on the MMLU-Pro and IFEval benchmarks.

The model is open-source with an MIT license.
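Since the preview is tuned for the ChatML template, prompts need to be wrapped in ChatML's role markers. Below is a minimal sketch of generic ChatML formatting, assuming the standard `<|im_start|>` / `<|im_end|>` tokens; the helper name `to_chatml` is hypothetical, and the exact template should be checked against the model's own tokenizer configuration.

```python
from typing import Dict, List


def to_chatml(messages: List[Dict[str, str]], add_generation_prompt: bool = True) -> str:
    """Render {"role", "content"} messages as a generic ChatML prompt string (hypothetical helper)."""
    parts = []
    for msg in messages:
        # Each turn is: <|im_start|>{role}\n{content}<|im_end|>\n
        parts.append(f"<|im_start|>{msg['role']}\n{msg['content']}<|im_end|>\n")
    if add_generation_prompt:
        # Open an assistant turn so the model continues generating from here.
        parts.append("<|im_start|>assistant\n")
    return "".join(parts)


prompt = to_chatml([{"role": "user", "content": "Please, introduce yourself."}])
print(prompt)
```

In practice, with Hugging Face `transformers`, calling `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` should produce the model's own template without hand-written formatting.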

What users think about it:

As expected, users have criticized the preview's 4K context window as limited compared with other recent models. Although this is explicitly described as a preview limitation, and longer context windows are promised for the full release in November 2024, some skepticism remains. Some in the AI community speculate that the full "Pro" version may be available only through a paid API at launch, which raises questions about how accurately the current open-source preview represents the final product.

Despite strong benchmark results, especially for a 22B-parameter model, users remain cautious about its real-world performance. Without extensive testing, it is hard to judge its practical usability or personality, and independent evaluation is needed to verify its capabilities across diverse applications.

It's worth noting that these points largely represent user concerns and assumptions rather than confirmed facts 😎. We'll have to wait until November to see how Solar Pro truly performs.