Top-Trending LLMs Over the Last Week. Week #13.

26/03/2024 10:00:00

Following last week's roundup of top-trending large language models (LLMs), here's the latest update on the AI models that captured the community's attention in the week leading up to March 26, 2024. This week brings new entries and significant updates, reflecting how quickly the field is moving. We ranked the models by their download and like counts, using data from Hugging Face and LLM Explorer.

1. Grok 1 Hf by Keyfan: An unofficial, dequantized version of Grok-1 converted to the Hugging Face Transformers format. Keyfan's conversion restores higher-precision weights and makes the model usable with standard Transformers tooling.

2. Mistral 7B V0.2 Hf by Alpindale: A Hugging Face-format conversion of the Mistral 7B v0.2 base model, which offers a larger context window and performance optimizations. Suggested uses include information retrieval and creative content generation.

3. Starling LM 7B Beta by Nexusflow: Trained with Reinforcement Learning from AI Feedback (RLAIF) for stronger reasoning, this model needs a specific chat template; without it, output quality can drop.

Community Insights

Feedback suggests that the beta version is more verbose than the alpha. Fans of the alpha valued its concise, direct answers, particularly for productivity tasks such as writing summaries and reviewing code. The extra detail may help in some applications, but some users see it as a downgrade for tasks that call for brevity.
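To make the chat-template requirement concrete, here is a minimal sketch that assembles a prompt in the plain-text "GPT4 Correct" format described on the Starling model card, as we understand it: each turn ends with `<|end_of_turn|>`, and a "Code" prefix replaces "GPT4 Correct" for coding tasks. The function name `build_starling_prompt` is hypothetical, and the exact format is an assumption to verify against the model card or the tokenizer's bundled template before use.

```python
def build_starling_prompt(turns, mode="GPT4 Correct"):
    """Assemble a Starling-style prompt (format assumed from the model card).

    turns: list of (role, text) pairs, role is "user" or "assistant".
    mode:  "GPT4 Correct" for general chat, "Code" for coding tasks.
    """
    eot = "<|end_of_turn|>"  # assumed turn terminator token
    parts = []
    for role, text in turns:
        if role == "user":
            parts.append(f"{mode} User: {text}{eot}")
        elif role == "assistant":
            parts.append(f"{mode} Assistant: {text}{eot}")
        else:
            raise ValueError(f"unknown role: {role!r}")
    # Finish with an open assistant header so the model writes the next reply.
    parts.append(f"{mode} Assistant:")
    return "".join(parts)


print(build_starling_prompt([("user", "Hello")]))
```

In practice, if the tokenizer ships a chat template, calling `tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)` in Hugging Face Transformers is the safer route, since it uses whatever format the model authors bundled rather than a hand-written copy.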

4. Cerebrum 1.0 8x7b by AetherResearch: Specialized in reasoning, this model uses a chain-of-thought approach to problem-solving and performs well across a range of reasoning benchmarks.

Community Insights

People are excited and curious about what Cerebrum 8x7b can do, especially in reasoning, and compare it to top models such as GPT-3.5 Turbo and Gemini Pro. Many see it as real progress, but some worry about how it handles long texts and repeated information, suggesting there is room for improvement.

5. Grok 1 by HPC-AI Tech: A PyTorch version of Grok-1 that uses the ColossalAI framework to speed up inference and remains compatible with the original tokenizer.

6. Beyonder 4x7B V3 by Maxime Labonne: An improved mixture-of-experts (MoE) model that combines four expert models for versatile performance across tasks, with notable gains in efficiency and capability.

7. EvoLLM JP V1 10B by Sakana AI: A Japanese LLM built with Sakana AI's Evolutionary Model Merge method, which combines the strengths of three source models into a single model.

8. EvoLLM JP V1 7B by Sakana AI: A second Sakana AI entry in the Japanese LLM space, a smaller model produced with the same evolutionary merging approach.

9. Rhea-72b-v0.5 by Davidkim205: Aims to improve LLM performance through distinctive training methods, including a novel way of generating datasets for Direct Preference Optimization (DPO) training.

10. WhiteRabbitNeo 7B V1.5a by WhiteRabbitNeo: A model designed for cybersecurity applications, emphasizing responsible use and covering a wide range of cybersecurity topics.

The trends this week point to an increased focus on specialized, efficient, and ethically minded AI development.

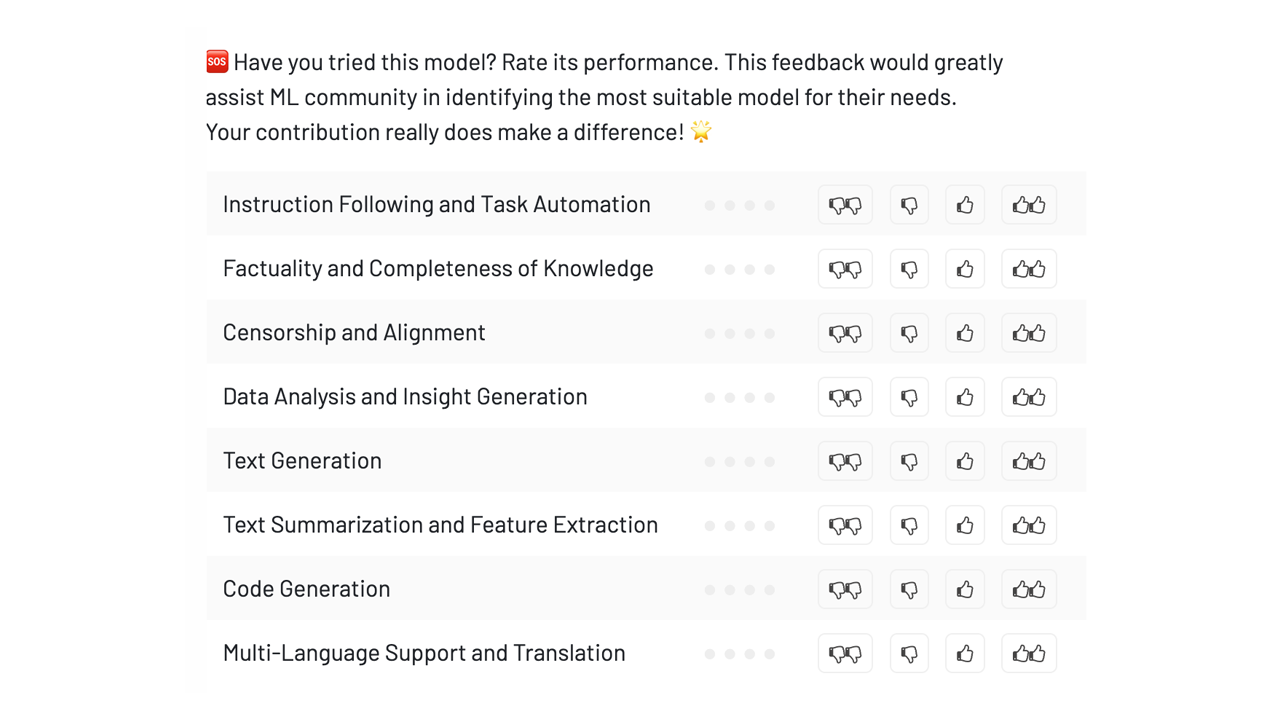

We deeply value your insights and invite you to share your model experiences and reviews on our platform. Your feedback helps the AI community make informed choices in the vast world of LLMs.

Stay tuned!